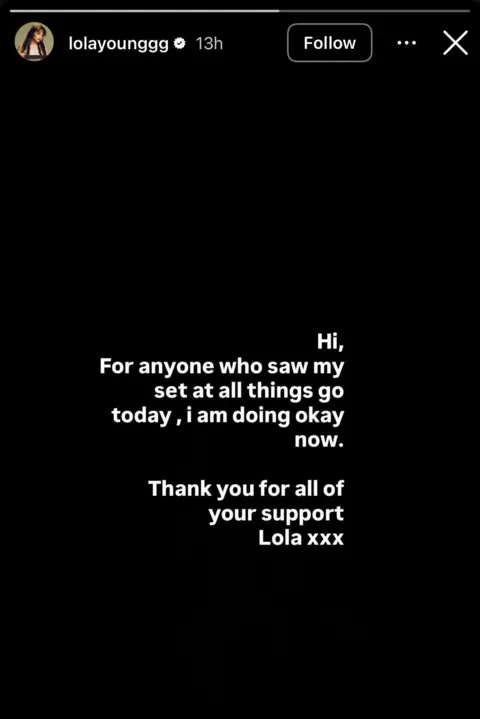

Instagram users have voiced their frustration after being wrongly accused of breaching the platform's child sexual exploitation rules and banned as a result. Reports indicate that the wrongful bans have caused "extreme stress" for affected individuals, cutting them off from important memories and, in some cases, harming their livelihoods.

Several users shared their experiences with the BBC, emphasizing the emotional strain of being labeled as violators of child exploitation policies. Many of these bans appear to have been driven by artificial intelligence, which has come under scrutiny for its failures in moderation. Users have formed online communities urging Meta to address the issue after a substantial number of complaints surfaced.

One individual, David from Scotland, recounted how he lost access to more than ten years' worth of photos and messages because of an erroneous ban. He highlighted the stigma surrounding the accusation, explaining how hard it was to discuss his situation with family and friends. After media attention on his case, his account was swiftly reinstated, accompanied by a hasty apology from Meta.

Another user, Faisal, a student in London, said his budding career in the creative field was jeopardized by a wrongful suspension, which left him feeling isolated and mentally distressed. After the BBC became involved, his account was also restored within hours.

Despite the public outcry over the flawed moderation process, Meta has been largely reticent about specific cases and about whether its AI systems are malfunctioning. Critics say the lack of clarity in its policies remains a major issue, and suggest the wrongful bans may stem from changes in community guidelines and an insufficient appeals process.

These incidents highlight the pressing need for social media platforms to reevaluate their safety measures and develop more reliable systems for protecting users while ensuring fair treatment.
