Elon Musk's AI model Grok will no longer be able to edit photos of real people to show them in revealing clothing in jurisdictions where it is illegal, following widespread concern over sexualized AI deepfakes.

"We have implemented technological measures to prevent the Grok account from allowing the editing of images of real people in revealing clothing such as bikinis," reads an announcement on X, which operates the Grok AI tool.

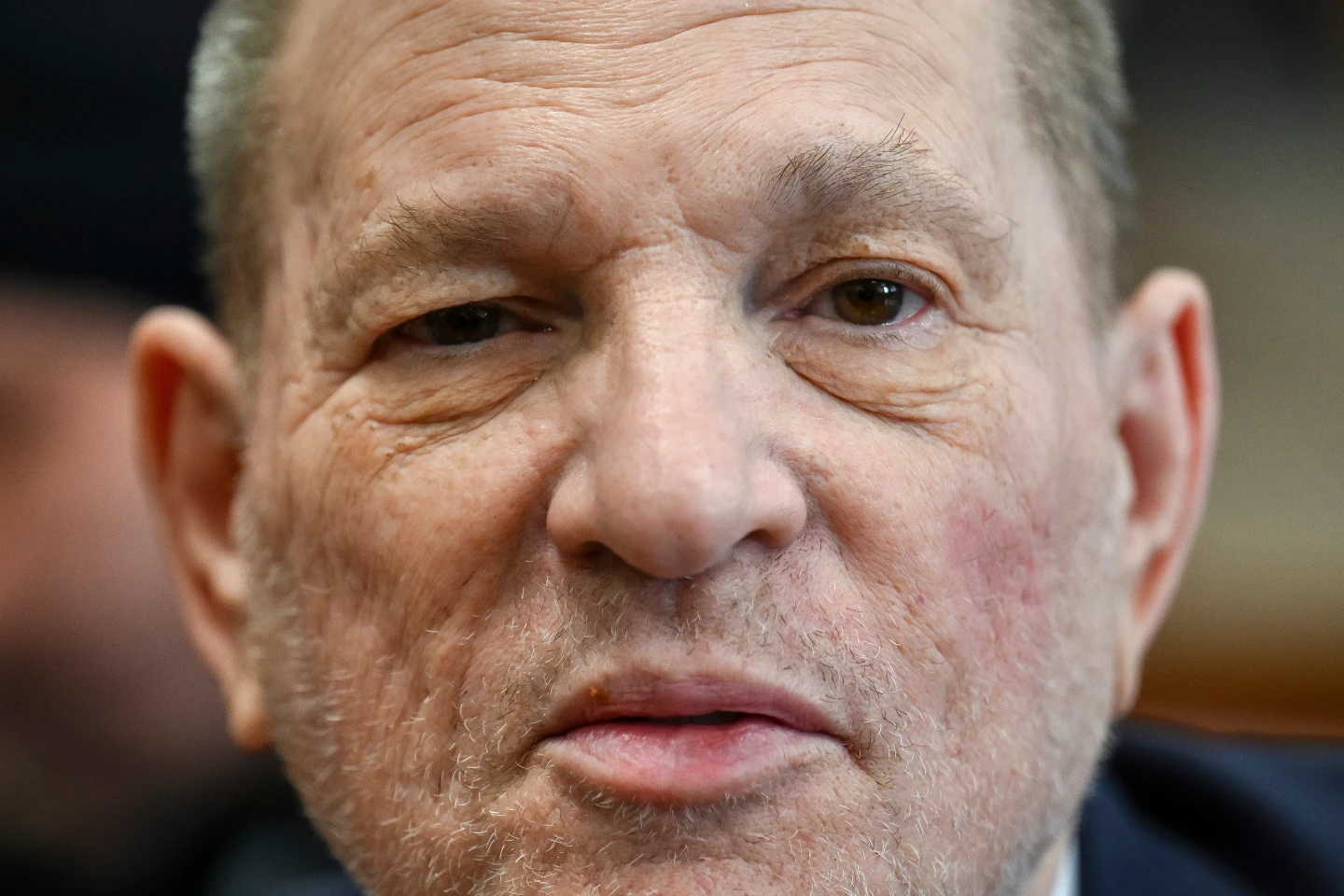

The change was put in place quickly after California's top prosecutor announced a probe into the spread of sexualized AI deepfakes, including distressing instances involving children.

"We now geoblock the ability of all users to generate images of real people in bikinis, underwear, and similar attire via the Grok account in those jurisdictions where it's illegal," X explained in a statement.

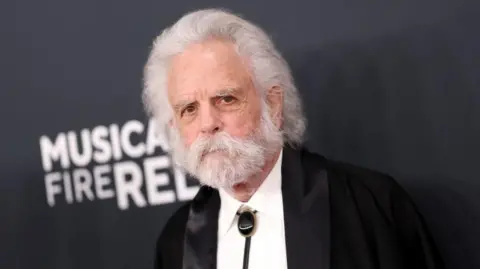

Only paid users will be able to edit images using Grok on the platform, a restriction aimed at preventing abuse of the AI system. With NSFW settings, Grok is supposed to permit upper-body nudity of imaginary humans in line with R-rated film standards, but users remain concerned about how enforcement will play out.

Critics have argued that Grok's editing features should have been removed as soon as reports of misuse surfaced, while global leaders have increasingly condemned the tool. Malaysia and Indonesia have already banned Grok because it can be used to generate potentially explicit images without consent.